Building Trust in the Age of Autonomous Systems: A CISO's Perspective on AI Governance

As the Chief Information Security Officer (CISO) at my company, I've witnessed firsthand how Artificial Intelligence (AI) is rapidly transforming our operations, from automating processes to informing critical decisions. The benefits are real, but so are the risks, and those risks demand immediate, strategic attention. My top priority is ensuring that as we adopt AI and autonomous systems, we do so in a way that is not only innovative and efficient but also trustworthy, secure, and compliant. This requires a dedicated, multi-layered approach to AI governance and security.

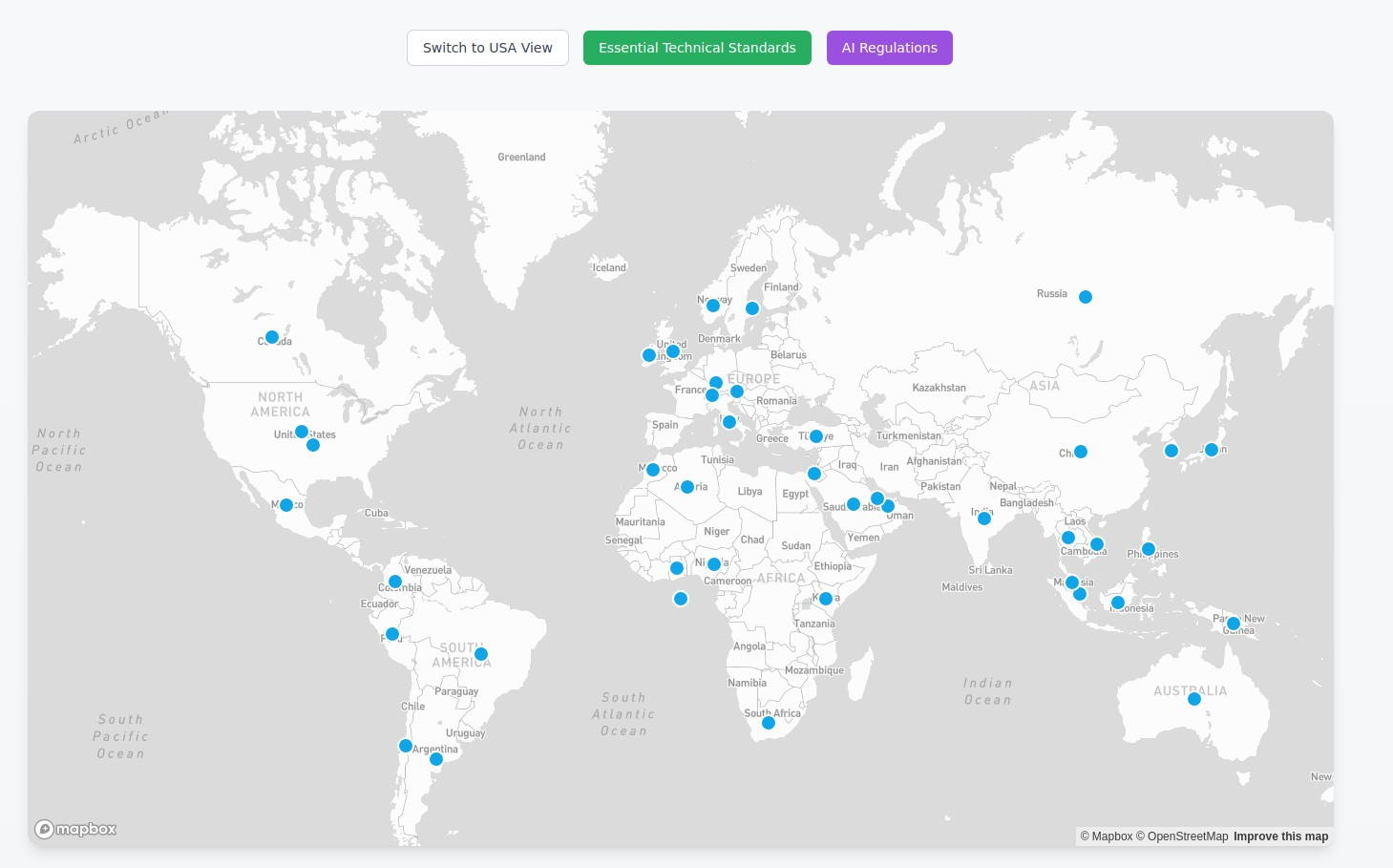

The global regulatory environment for AI is evolving quickly, with significant frameworks emerging, notably from the European Union and the United States. The EU AI Act, for instance, is a comprehensive legal framework designed to foster trustworthy AI through risk-based rules for providers (the organizations that develop AI systems) and deployers. It sorts AI systems into four risk levels (unacceptable, high, limited, and minimal or no risk) and places stringent obligations on high-risk systems, including conformity assessments and post-market monitoring. This is not just a European concern: because of the Act's potential extraterritorial effect, we must understand and prepare for its requirements if we operate, or intend to operate, within the EU market.
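
To make the risk-based structure concrete, here is a minimal Python sketch of how an internal AI inventory might tag each system with a tier and look up the obligations that follow. The `AISystem` class, the `OBLIGATIONS` table, and the example system name are hypothetical, and the obligation lists are a loose illustrative summary rather than legal text; treating "not offered in the EU" as "no obligations" is also a deliberate simplification.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    """The four risk levels described above."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified summary for illustration; not legal advice.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["do not deploy (prohibited practice)"],
    RiskTier.HIGH: ["conformity assessment", "post-market monitoring"],
    RiskTier.LIMITED: ["transparency notice to users"],
    RiskTier.MINIMAL: [],
}


@dataclass
class AISystem:
    name: str
    tier: RiskTier
    eu_market: bool  # True if the system is, or will be, offered in the EU


def obligations_for(system: AISystem) -> list[str]:
    """Return the illustrative obligations that apply to one system."""
    if not system.eu_market:
        # Simplification: real scoping questions belong with legal counsel.
        return []
    return list(OBLIGATIONS[system.tier])


if __name__ == "__main__":
    screener = AISystem("resume-screener", RiskTier.HIGH, eu_market=True)
    for item in obligations_for(screener):
        print(f"{screener.name}: {item}")
```

Even a table this small is useful in practice, because it forces every system in the inventory to carry an explicit tier that legal and security teams can challenge.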

In the United States, an approach is also taking shape. It recognizes that while existing laws address some risks, new guidance and measures are needed. A key resource we rely on is the NIST AI Risk Management Framework (AI RMF), a voluntary framework designed to help organizations manage AI risks and promote trustworthy, responsible AI throughout the system lifecycle. It gives us, as "AI actors," a structured way to think about and address these challenges.

The AI RMF is built around four core functions: GOVERN, MAP, MEASURE, and MANAGE. From my seat as CISO, the GOVERN function is the foundation of our organization's approach to AI risk. It is about cultivating a culture of risk management, defining policies, roles, and responsibilities, and establishing clear accountability structures. AI risk management needs to be integrated into our broader enterprise risk management strategy rather than run as a separate track. This means executive leadership must take responsibility for decisions about the risks of developing and deploying AI systems, and we need clear lines of communication for managing those risks.

The MAP function helps us understand the context of our AI systems, identify potential risks, categorize the systems based on their tasks and methods, and characterize the potential impacts on individuals, groups, and society. This involves engaging with interdisciplinary teams and stakeholders to ensure a broad understanding of potential effects.

MEASURE is about developing objective methods to evaluate AI system trustworthiness and identify risks, providing a traceable basis for decision-making. This leads into the MANAGE function, where we prioritize the risks we have mapped and measured, implement controls, and track what remains, including risks introduced by third-party components in the AI supply chain.
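
One way to picture how MEASURE feeds MANAGE is a small risk register that scores each identified risk and sorts the list for treatment. The sketch below is an assumption on my part, not something the AI RMF prescribes: the `AIRisk` fields, the 1 to 5 scales, and the likelihood-times-impact score are all hypothetical conventions borrowed from ordinary enterprise risk practice.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    system: str
    description: str
    likelihood: int            # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int                # hypothetical scale: 1 (negligible) to 5 (severe)
    third_party: bool = False  # True if the risk comes from a supplier component

    @property
    def score(self) -> int:
        """Simple likelihood x impact score; many other schemes are possible."""
        return self.likelihood * self.impact


def prioritize(risks: list[AIRisk]) -> list[AIRisk]:
    """Order risks highest score first; on ties, third-party risks come first."""
    return sorted(risks, key=lambda r: (r.score, r.third_party), reverse=True)


if __name__ == "__main__":
    register = [
        AIRisk("chat-assistant", "prompt injection leaks internal data", 4, 4),
        AIRisk("fraud-model", "poisoned third-party training data", 2, 5, third_party=True),
        AIRisk("doc-summarizer", "inaccurate summaries", 3, 2),
    ]
    for risk in prioritize(register):
        print(f"{risk.score:>2}  {risk.system}: {risk.description}")
```

The value is less in the arithmetic than in the traceability: each priority decision can be tied back to a recorded likelihood and impact that someone is accountable for.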

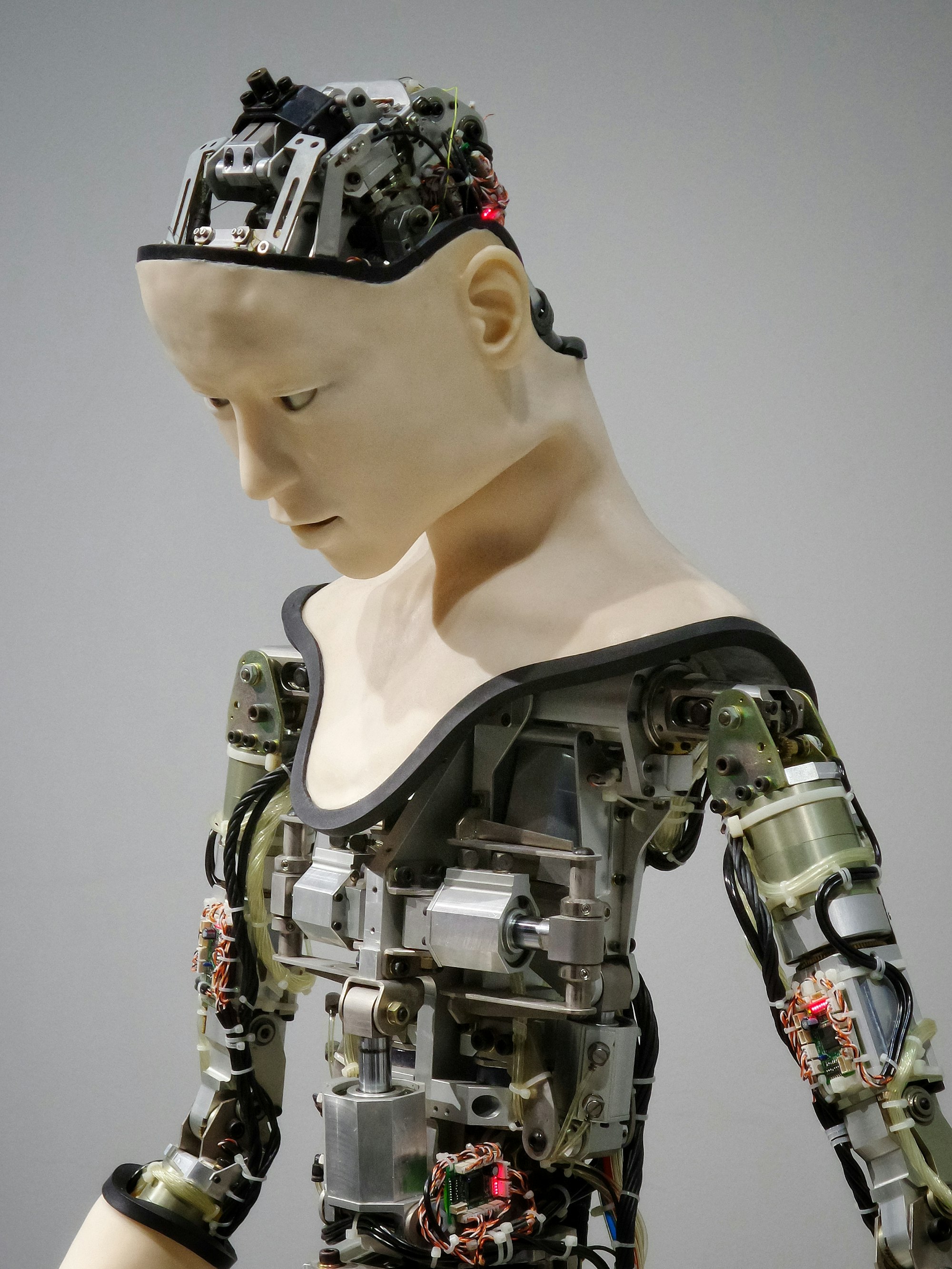

From a security perspective, managing risks from the AI supply chain is particularly critical. Autonomous systems and AI models often rely on components and data from various sources, expanding our attack surface. We need to apply trust controls to anything entering our training and development environments and ensure the security of the infrastructure itself. This mirrors our existing cybersecurity efforts but requires specific considerations for AI's unique vulnerabilities. Tools like CISA's Autonomous Vehicle Cyber-Attack Taxonomy (AV|CAT) can help us conceptualize specific attack sequences and potential impacts in relevant domains like transportation.
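
As one illustration of a trust control at the boundary of a training environment, the sketch below checks a downloaded model or dataset file against an approved SHA-256 digest before it is admitted. The `approved` mapping (file name to expected digest) is a hypothetical allowlist that would, in practice, come from a signed manifest or an internal registry; the function names are mine.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_trusted(path: Path, approved: dict[str, str]) -> bool:
    """Admit a file only if its name is on the allowlist and its digest matches.

    `approved` maps file names to expected SHA-256 hex digests (hypothetical
    format). Unknown files are rejected without being read.
    """
    expected = approved.get(path.name)
    return expected is not None and sha256_of(path) == expected
```

A digest check only proves the file is the one that was approved; it says nothing about whether the approved artifact was safe to begin with, so it complements rather than replaces vetting of the source.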

A critical element of AI security and trustworthiness is transparency and explainability. We need to understand how AI systems arrive at their decisions, the data they use, and their limitations. This is not only crucial for compliance in some sectors but also essential for building trust with users and identifying potential issues like unfair biases or unexpected behaviors. As a CISO, I need to ensure our technical teams can provide explanations that are understandable to business leaders, legal teams, and even affected individuals.

Effective AI governance and security also demand robust monitoring and incident response capabilities. We must have processes in place to identify and respond to security incidents and vulnerabilities associated with our AI systems. This includes deciding on appropriate levels of logging for generative AI systems to enable monitoring, auditing, and incident response. Human oversight to investigate flagged anomalies is a key part of this process.
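
The logging decision can be sketched as a structured audit record for each generative AI interaction. This example assumes one particular logging level, chosen only for illustration: it stores a hash and length of the prompt rather than the raw text, which limits exposure of sensitive content but also limits what investigators can reconstruct. The field names and the `flagged_for_review` marker are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_log_record(user_id: str, model: str, prompt: str, response: str,
                     flagged: bool = False) -> str:
    """Return one JSON log line describing a generative AI interaction."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "model": model,
        # Hash instead of raw text: an assumed policy choice, not a requirement.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "response_chars": len(response),
        "flagged_for_review": flagged,
    }
    return json.dumps(record, sort_keys=True)


def needs_human_review(log_lines: list[str]) -> list[dict]:
    """Pick out the records an analyst should investigate."""
    records = [json.loads(line) for line in log_lines]
    return [r for r in records if r["flagged_for_review"]]
```

Whether to keep raw prompts, hashes, or nothing at all is exactly the kind of trade-off between privacy and forensic value that should be decided deliberately and documented, not left to defaults.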

Achieving sound AI governance requires a shift in organizational culture and an investment in our people. This means ensuring that our developers, data scientists, and even end-users receive training on AI ethics, security threats, and responsible practices. Fostering a "safety-first mindset" throughout the AI lifecycle is paramount.

Our approach is to integrate these AI-specific security and risk management practices into our existing cybersecurity strategies. This involves formalizing collaboration across security functions and integrating physical security and cybersecurity best practices where AI intersects with the physical world, such as with autonomous vehicles.

Implementing strong AI governance and security frameworks, like those outlined in the NIST AI RMF, is not merely a compliance exercise; it's about ensuring the trustworthiness of our AI systems. By establishing clear accountability, mapping and managing risks, securing the supply chain, prioritizing explainability, and empowering our teams with the right knowledge, we can navigate the complexities of AI and autonomous systems, unlocking their transformative potential while protecting our organization and our stakeholders. This is an ongoing journey, requiring continuous evaluation and adaptation as the technology and the threat landscape evolve.